I like teaching.

Teaching has been my most formative experience at Penn. I don’t have much life advice to give, but if you’re a prospective student or a current student reading this, hear me out: teach at least once as an undergrad. You won’t regret it.

Every year, I make time for at least one teaching project to keep myself sharp. This is my way of fending off “academic bulimia”. I’ve been a teaching assistant (TA) for CIS 160 (Mathematical Foundations of CS) and MGMT 291 (Negotiations). But I wanted to throw myself into the deep end in my senior year, so I built up a massive project as the culmination of my teaching progress.

This semester, I created and taught a class from scratch: CIS 700-004 (Deep Learning). I’m the creator and lead instructor, and I co-lecture with Professor Konrad Kording and postdoc David Rolnick.

The Motivation: Why a 2nd Semester Senior is Doing Work

Senioritis is a very real thing. I originally had 3 classes planned for my last semester. Beautiful stuff. Instead, I now find myself logging 20-30 hours each week for this class. What kind of masochist would do this?!

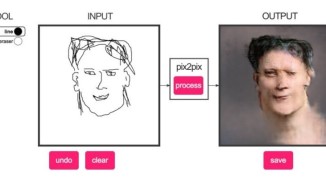

An online web tool that uses generative adversarial networks to embed images in new styles.

The idea for this class took root when an underclassman asked me how I learned deep learning. I wished I could have said I learned from raw study of math textbooks or reading academic publications or following ML blogs. Or from anything systematic. My answer was embarrassingly silly: I picked up deep learning by talking to people who know a lot and have interesting project ideas. Shoutouts to Tanmay Chordia (M&T ’19) and Sahil Singhal (M&T ’17).

I knew there had to be a better way to learn than wandering into interesting conversations. I also knew Penn is uniquely suited to teach a class on deep learning because of its heavy focus on interdisciplinary study (see: the Jerome Fisher M&T Program). What better subject for a Penn class than deep learning, which sits at the confluence of optimization, linear algebra, probability, algorithms, neuroscience, and data science?

Frankly, I was surprised Penn had never offered a deep learning course before. The chance to create a structured, organized way to learn deep learning is what propelled me to design and teach CIS 700-004.

The Class: Going Deep on Deep Learning

Deep learning is a field of machine learning (ML) that uses neural networks with many layers to solve extremely complex modeling problems. My class is a graduate-level survey course covering the neuroscientific intuition, mathematics, implementation tools, and domain knowledge students need to apply deep learning successfully to their data science problems.

Students in the course learn to implement models in PyTorch, an open-source deep learning framework that can do some pretty amazing things. We build models that can:

- Read handwriting with feedforward neural networks

- Identify celebrities with convolutional neural nets

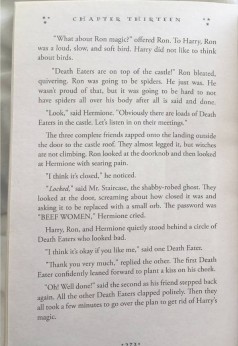

- Write sequels to Harry Potter using generative LSTMs

- Edit Nic Cage’s face into any video using generative adversarial networks

- Generate bogus scientific articles using transformers

A tongue-in-cheek application of deep learning mimicking JK Rowling’s writing via a generative LSTM.

Many of the models sound like straight-up magic at first. But we also teach which problems deep learning can and cannot solve (I try not to overhype deep learning, and I present the math as it is).

The Methods: How I Teach

I want my class to be useful. Every facet of my teaching is designed around the notion of applicability.

Curriculum: The curriculum is designed to be most useful to data scientists in the long run.

There’s a tension between (1) presenting mathematical intuitions for how all deep learning models work and (2) presenting specific “hot” deep learning models that are currently state-of-the-art. Teaching only the math produces students who aren’t familiar with the cutting edge (thus, unemployable students). Teaching only “hot” models ensures the class material will be outdated and ungeneralizable within a few years (thus, soon-to-be-unemployable students). I try to strike a careful balance between the two.

Lecture style: Every lecture presents information through slides, whiteboard notes, and code investigation. The rationale is that the course needs to cover the three pillars of data science:

- Problem context (where has deep learning been successful?)

- Inductive biases (what mathematical intuitions do we have for why deep learning works?)

- Implementation (how do software frameworks enable ML practitioners?)

Teaching each of these ideas coherently requires different media.

Variety is also more effective than monotony. An hour and a half of PowerPoint fails to teach anything specific, and 90 minutes of chalk talk can put a whole lecture hall to sleep. I won’t describe the eldritch horror of 90 minutes of live coding.

Student work: The class evaluation closely mimics how ML projects are evaluated in the tech industry. Our final project simulates the data science process: students find or create their own dataset, train and evaluate a battery of deep and non-deep models, and write up reports for both a technical and a non-technical audience. The goal is for students to become full-fledged ML scientists ready to tackle real-world problems with their intuition and skills.

Some students have already begun applying what they’ve learned in the course. I’ve gotten feedback that students applied our first homework to research, senior design projects, and independent work!

The Results: Do People Want to Learn this Stuff?

A full house during the week 6 lecture on ResNets.

Since I’m a first-time instructor, CIS 700 was originally capped at 40 students. I deliberately made the prerequisites extremely stringent: we only considered students who had taken a graduate machine learning class and had software or data science work experience.

And yet we got over 150 applications for the class waitlist. (!)

Penn students are dedicated. Even though I could enroll only a fraction of the students who applied, the class consistently draws between 80 and 120 students each lecture (I’m impressed that there are more auditors than enrolled students). Our teaching team had to scramble to accommodate the sheer number of bodies. We now comfortably fill an entire auditorium in one of Penn Medicine’s buildings.

What I’ve Learned: The Good, the Bad, and the Rewarding

There are many reasons teaching is rewarding. Any TA could probably list a dozen without stopping to draw breath:

- Lecturing is fun

- Helping students achieve their potential is really satisfying

- Teaching the material ensures you know the subject through and through

- It’s the best-paid student gig on campus

- The teaching community is passionate

- It’s a great way to get to know professors better

- Teaching improves your communication skills

But teaching CIS 700-004 has been a very different experience from my previous TA work because I’ve had more ownership over the end product. As a result, I’ve picked up a new set of skills and perspectives this semester.

I’m more responsible. Being at the helm of the class has been an exercise in accountability. I’m now responsible for my students’ learning outcomes, so decision-making is both freer and more of a burden. How should I handle late enrollees? What kind of late policy should I enforce? How should I distribute deadlines throughout the semester? No one’s handing me guidelines anymore. That’s a scary mix of exciting, rewarding, and overwhelming.

(Left) I’m gesturing excitedly about skip connections. (Right) The students fill up most of the auditorium. Photos by Johnathan Chen (M&T ’19). Funnily enough, Johnathan will be teaching a minicourse on photography this semester.

I’m better-informed. Teaching the class has motivated me to keep up-to-date with deep learning innovations. Since I created the syllabus last November, there have been several ground-breaking (read: syllabus-wrecking) discoveries. For example, the recent popularity of transformers like BERT and GPT-2 has rendered many previous models in sentence embedding, machine translation, and text generation completely obsolete. I’m continually reading papers and refactoring my lesson plans to make sure I’m delivering useful content.

I’m (gag, retch, cough) more detail-oriented. I’ve 10x’d my proofreading skills. I made a multiplication mistake in lecture once, and it took an in-class retraction, an online clarification, and several office hours sessions to clear it up. I now work with a healthy mix of caution and paranoia.

I hate spring break and snow days now. For the first time in my life, I have more work as a result of a snow day, and spring break has been an inconvenient pain-in-the-syllabus since day one. At this rate, I’ll be hating puppies and rainbows by the end of the semester.

I really am looking forward to the next two months. The natural language processing module of the course will be a hoot and a half, and I can’t wait to see what students create with their final projects.

See our course website if you’d like to follow the class!

Jeffrey Cheng is a computer science major concentrating in business analytics, and he is additionally pursuing a Master’s degree in data science. He is a member of the Class of 2019 and will continue his dedication to machine learning as an ML strategist at Palantir after graduation. His favorite thing about the Jerome Fisher Program is that “(t)he community generates a lot of interesting discussion around tech and its potential. I’ve learned way more from talking to my peers than from any class”.